Members:

Abstract:

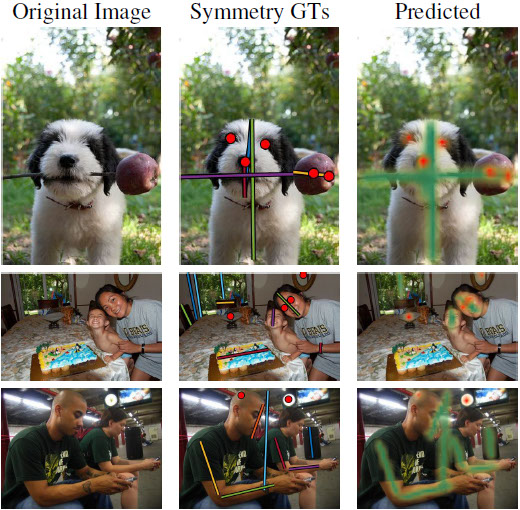

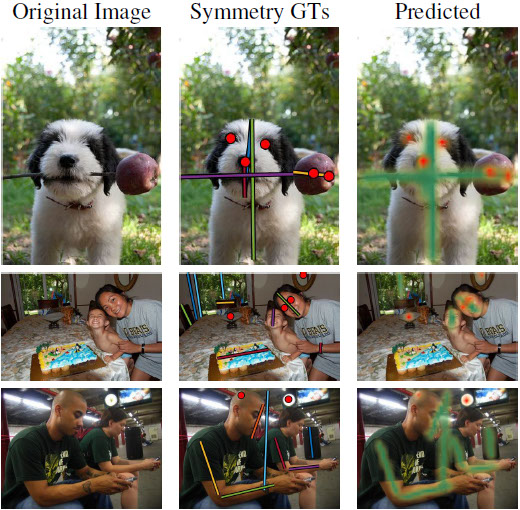

Humans take advantage of real-world symmetries for various tasks, yet capturing their superb symmetry perception mechanism in a computational model remains elusive. Encouraged by a new discovery (CVPR 2016) demonstrating extremely high inter-person accuracy of human-perceived symmetries in the wild, we have created the first deep-learning neural network for reflection and rotation symmetry detection (Sym-NET), trained on photos from the MS-COCO (Common Objects in COntext) dataset with nearly 11K symmetry labels from more than 400 human observers. We employ novel methods to convert discrete human labels into symmetry heatmaps, capture symmetry densely in an image, and quantitatively evaluate Sym-NET against multiple existing computer vision algorithms. Using the symmetry competition test sets from CVPR 2013 and unseen MS-COCO photos, Sym-NET comes out as the winner with significantly superior performance over all other competitors. Beyond mathematically well-defined symmetries on a plane, Sym-NET demonstrates abilities to identify viewpoint-varied 3D symmetries, partially occluded symmetrical objects, and symmetries at a semantic level.

Resources: [PDF]

[Supplemental] [Video of Oral Presentation]

Networks are designed to work with DeepLab V2 (link)

Sym-VGG Reflection:

[Caffemodel]

[Prototxt]

Sym-ResNet Reflection:

[Caffemodel]

[Prototxt]

Sym-VGG Rotation:

[Caffemodel]

[Prototxt]

Sym-ResNet Rotation:

[Caffemodel]

[Prototxt]

For our experiments,

- ${NUM_LABELS} is set to 1 for our networks, since each experiment has only one label (either a reflection or a rotation heatmap). The output is a heatmap that is scaled to [0,1] as a post-processing step (the raw network output may span a larger range).

- ${EXP} is the name of the experiment, 'ref_sym' or 'rot_sym', depending on the label. (If you get this wrong, the Caffe output will report the layers as being ignored.)

- The "data" layer defines where the image list is located; the list of images is read automatically. Make sure the list is in the same format as in the other DeepLab V2 experiments (one image filename per line).

    - root_folder is the root folder of the data

    - source is the path to the list of images

- The "im_mat" layer defines where the image output from the network is saved (useful for debugging).

- "fc*_interp" defines where the output of the networks goes. The * is a number and depends on the network (VGG and ResNet have different output layers).
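The [0,1] scaling mentioned in the list above can be sketched as follows. This is a minimal illustration that assumes a simple min-max rescaling with NumPy; the exact post-processing used in the experiments is not specified here, and the function name `rescale_heatmap` and the toy array are hypothetical.

```python
import numpy as np


def rescale_heatmap(raw, eps=1e-12):
    """Min-max rescale a raw network output to the range [0, 1].

    Assumption: min-max scaling is used; the original post-processing
    step may differ (for example, clipping or dividing by the maximum).
    """
    raw = np.asarray(raw, dtype=np.float64)
    lo, hi = raw.min(), raw.max()
    if hi - lo < eps:
        # A constant map carries no symmetry signal; return all zeros.
        return np.zeros_like(raw)
    return (raw - lo) / (hi - lo)


# Toy 2x3 "network output" whose values fall outside [0, 1].
raw_output = np.array([[-2.0, 0.0, 3.0],
                       [1.0, 8.0, 4.0]])
heatmap = rescale_heatmap(raw_output)
```

The guard for a constant map avoids a division by zero when the network returns the same value at every pixel.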

The easiest way to use these is to set up the same folders as DeepLab V2, since it provides some easy commands that rename all of these for you automatically.
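If you prefer to fill in the placeholders yourself, plain text substitution may be enough. The sketch below assumes the prototxt files are ordinary text templates containing ${EXP} and ${NUM_LABELS}; the template fragment, the ${DATA_ROOT} placeholder, and the helper name `fill_placeholders` are hypothetical and are not taken from the released files.

```python
from string import Template

# Hypothetical prototxt fragment, for illustration only.
PROTOTXT_TEMPLATE = """\
layer {
  name: "fc8_${EXP}"
  type: "Convolution"
  convolution_param { num_output: ${NUM_LABELS} }
}
layer {
  name: "data"
  image_data_param { root_folder: "${DATA_ROOT}" }
}
"""


def fill_placeholders(template_text, exp, num_labels=1):
    """Substitute ${EXP} and ${NUM_LABELS} in a prototxt template."""
    if exp not in ("ref_sym", "rot_sym"):
        # A wrong experiment name makes the layer names mismatch the
        # trained weights, so reject it before writing anything.
        raise ValueError("exp must be 'ref_sym' or 'rot_sym'")
    # safe_substitute leaves unknown placeholders (here ${DATA_ROOT})
    # untouched instead of raising an error.
    return Template(template_text).safe_substitute(
        EXP=exp, NUM_LABELS=num_labels
    )


filled = fill_placeholders(PROTOTXT_TEMPLATE, exp="ref_sym")
print(filled)
```

Using `safe_substitute` rather than `substitute` means any other placeholders in the file are left for a later step instead of causing an error.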

Images, labels, and a demo will come soon.

Acknowledgment:

This Penn State project is funded by a National Science Foundation (NSF) Creative Research Award for Transformative Interdisciplinary Ventures (CREATIV):

INSPIRE: Symmetry Group-based Regularity Perception in Human and Computer Vision (NSF IIS-1248076)